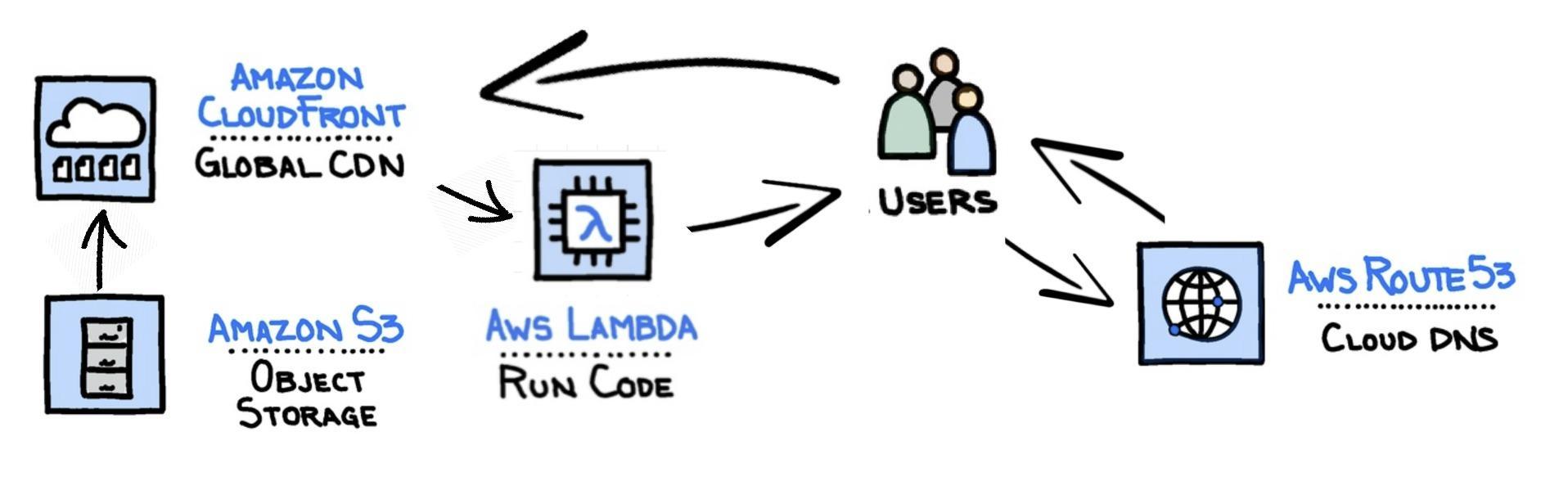

I wanted to create a platform for this blog that would be low cost and low maintenance. With that in mind, I’d settled on creating a static site hosted on AWS. The overall design would need to look something like this:

S3 would store the site content, Route53 would handle DNS, and CloudFront would provide SSL as well as enabling the use of Lambda@Edge (for adding security headers to our responses).

The next decision was to define the required infrastructure using Terraform. I could have created the platform simply by opening up the management console and creating the resources manually. I decided, however, that it was worth the upfront investment in defining the infrastructure as code, despite this being a small, solo project.

I also decided to host all resources in the us-east-1 region, because some resources, such as the SSL certificate and the Lambda@Edge function, must be created in that region. Given we’ve got CloudFront for global distribution, I didn’t see any reason to split resources across regions.
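
The resources that follow reference var.site_domain and var.alt_site_domain without showing where they are declared. A minimal sketch of the provider and variable setup might look like the following; the variable types and descriptions are assumptions inferred from how the values are used, not the original configuration.

```hcl
# Assumed provider configuration: every resource is created in us-east-1.
provider "aws" {
  region = "us-east-1"
}

# Assumed: the apex domain of the site, e.g. "example.com".
variable "site_domain" {
  type        = string
  description = "Apex domain name of the site"
}

# Assumed to be a list, because it is passed to `aliases` and
# `subject_alternative_names`, both of which expect a set of strings.
variable "alt_site_domain" {
  type        = list(string)
  description = "Additional domain names served by the distribution"
}
```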

S3 Buckets

We’ll start with the core piece of the site, the S3 bucket that will store our site content.

    resource "aws_s3_bucket" "site" {
      bucket = "s3.${var.site_domain}"
      acl    = "private"

      logging {
        target_bucket = aws_s3_bucket.site_log_bucket.id
      }
    }

The site bucket above references a second bucket that will store its access logs, so let’s create that next.

    resource "aws_s3_bucket" "site_log_bucket" {
      bucket = "${var.site_domain}-logs"
      acl    = "log-delivery-write"

      lifecycle_rule {
        enabled = true

        expiration {
          days = 90
        }
      }
    }

CloudFront

Next, we’ll create a CloudFront distribution.

    resource "aws_cloudfront_distribution" "s3_blog_distribution" {
      origin {
        domain_name = aws_s3_bucket.site.bucket_regional_domain_name
        origin_id   = "s3.${var.site_domain}"

        s3_origin_config {
          origin_access_identity = aws_cloudfront_origin_access_identity.origin_access_identity.cloudfront_access_identity_path
        }
      }

      enabled             = true
      is_ipv6_enabled     = true
      comment             = "Distribution for ${var.site_domain}"
      default_root_object = "index.html"

      logging_config {
        include_cookies = false
        bucket          = "${aws_s3_bucket.cloudfront_bucket.id}.s3.amazonaws.com"
        prefix          = "blog"
      }

      aliases = var.alt_site_domain

      default_cache_behavior {
        target_origin_id = "s3.${var.site_domain}"
        allowed_methods  = ["GET", "HEAD"]
        cached_methods   = ["GET", "HEAD"]

        forwarded_values {
          query_string = false

          cookies {
            forward = "none"
          }
        }

        lambda_function_association {
          event_type   = "origin-response"
          lambda_arn   = aws_lambda_function.lambda_edge_response.qualified_arn
          include_body = false
        }

        viewer_protocol_policy = "redirect-to-https"
        min_ttl                = 0
        default_ttl            = 60
        max_ttl                = 60
      }

      restrictions {
        geo_restriction {
          restriction_type = "none"
        }
      }

      viewer_certificate {
        acm_certificate_arn      = aws_acm_certificate.cert.arn
        ssl_support_method       = "sni-only"
        minimum_protocol_version = "TLSv1.2_2018"
      }

      depends_on = [aws_acm_certificate.cert, aws_s3_bucket.cloudfront_bucket]
    }

In the above distribution, we have referenced an Origin Access Identity (OAI). The OAI is akin to a CloudFront user. We’ll be referencing this in our S3 site bucket policy later. Let’s create the OAI for now.

    resource "aws_cloudfront_origin_access_identity" "origin_access_identity" {
      comment = "Identity used by ${var.site_domain} distribution"
    }

The distribution also references a separate bucket for its own logs, so we need to create that too.

    resource "aws_s3_bucket" "cloudfront_bucket" {
      bucket = "${var.site_domain}-cloudfront"
      acl    = "private"

      lifecycle_rule {
        enabled = true

        expiration {
          days = 90
        }
      }
    }

Remember we said we needed the OAI for our site bucket access policy? Let’s create the policy and attach it to the bucket now.

    data "aws_iam_policy_document" "s3_site_policy" {
      statement {
        actions   = ["s3:GetObject"]
        resources = ["${aws_s3_bucket.site.arn}/*"]

        principals {
          type        = "AWS"
          identifiers = [aws_cloudfront_origin_access_identity.origin_access_identity.iam_arn]
        }
      }

      statement {
        actions   = ["s3:ListBucket"]
        resources = [aws_s3_bucket.site.arn]

        principals {
          type        = "AWS"
          identifiers = [aws_cloudfront_origin_access_identity.origin_access_identity.iam_arn]
        }
      }
    }

    resource "aws_s3_bucket_policy" "site_bucket_policy" {
      bucket = aws_s3_bucket.site.id
      policy = data.aws_iam_policy_document.s3_site_policy.json
    }
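
Because the bucket policy only grants access to the OAI, the site bucket never needs to be publicly readable. As an optional extra guard, which is not part of the original setup, a public access block could be added to the bucket. This is a sketch only; the resource name site is a hypothetical choice.

```hcl
resource "aws_s3_bucket_public_access_block" "site" {
  bucket = aws_s3_bucket.site.id

  block_public_acls       = true
  ignore_public_acls      = true
  block_public_policy     = true
  restrict_public_buckets = true

  # Assumption: creating this after the bucket policy keeps the two
  # bucket-level API calls from running at the same time.
  depends_on = [aws_s3_bucket_policy.site_bucket_policy]
}
```

A policy that names a specific principal such as the OAI is not treated as public, so these settings should not interfere with CloudFront reading from the bucket.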

Certificate

We want to serve our content over an encrypted channel using TLS (still commonly referred to by the name of its deprecated predecessor, SSL). To achieve this, we need to create a certificate. We’ve already referenced this in the CloudFront distribution resource definition, so let’s create that next.

    resource "aws_acm_certificate" "cert" {
      domain_name               = var.site_domain
      subject_alternative_names = var.alt_site_domain
      validation_method         = "DNS"

      lifecycle {
        ignore_changes = [subject_alternative_names, id]
      }
    }

Once we’ve requested a certificate, we need to prove we control the domain before ACM will issue it. Part of this validation workflow is creating a resource that represents the successful validation of the certificate; it depends on the DNS validation records we’ll create in the Route53 section.

    resource "aws_acm_certificate_validation" "cert" {
      certificate_arn = aws_acm_certificate.cert.arn

      validation_record_fqdns = [
        aws_route53_record.validation_1.fqdn,
        aws_route53_record.validation_2.fqdn,
      ]

      lifecycle {
        ignore_changes = [
          id,
        ]
      }
    }

Route53

Next up, DNS. As mentioned earlier, we need to create DNS records to prove we have control of the domain before a certificate becomes available to attach to our CloudFront distribution. Let’s do that now.

    resource "aws_route53_zone" "zone" {
      name = var.site_domain
    }

    resource "aws_route53_record" "validation_1" {
      name            = aws_acm_certificate.cert.domain_validation_options.0.resource_record_name
      type            = aws_acm_certificate.cert.domain_validation_options.0.resource_record_type
      zone_id         = aws_route53_zone.zone.id
      records         = [aws_acm_certificate.cert.domain_validation_options.0.resource_record_value]
      ttl             = "60"
      allow_overwrite = true
    }

    resource "aws_route53_record" "validation_2" {
      name            = aws_acm_certificate.cert.domain_validation_options.1.resource_record_name
      type            = aws_acm_certificate.cert.domain_validation_options.1.resource_record_type
      zone_id         = aws_route53_zone.zone.id
      records         = [aws_acm_certificate.cert.domain_validation_options.1.resource_record_value]
      ttl             = "60"
      allow_overwrite = true
    }
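
The two record resources above assume the certificate has exactly two names to validate and index into domain_validation_options by position. In version 3 and later of the AWS provider that attribute is a set rather than a list, so positional indexing no longer works. A sketch of the for_each pattern that would take the place of validation_1 and validation_2 in that case follows; the resource name validation and the local validation_fqdns are hypothetical.

```hcl
# One record per domain name on the certificate, keyed by that name.
resource "aws_route53_record" "validation" {
  for_each = {
    for dvo in aws_acm_certificate.cert.domain_validation_options : dvo.domain_name => {
      name   = dvo.resource_record_name
      type   = dvo.resource_record_type
      record = dvo.resource_record_value
    }
  }

  zone_id         = aws_route53_zone.zone.zone_id
  name            = each.value.name
  type            = each.value.type
  records         = [each.value.record]
  ttl             = 60
  allow_overwrite = true
}

locals {
  # Would replace the hand-written list passed to
  # validation_record_fqdns on the certificate validation resource.
  validation_fqdns = [for record in aws_route53_record.validation : record.fqdn]
}
```

This form also keeps working if names are later added to or removed from the certificate, since the set of records follows the certificate rather than a fixed count.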

We also need to create a CNAME record pointing www.martintyrer.com to martintyrer.com.

    resource "aws_route53_record" "apex_cname" {
      zone_id = aws_route53_zone.zone.zone_id
      name    = "www.${var.site_domain}."
      type    = "CNAME"
      records = ["${var.site_domain}."]
      ttl     = "3600"
    }

We also need an alias A record pointing martintyrer.com to our CloudFront distribution.

    resource "aws_route53_record" "www" {
      zone_id = aws_route53_zone.zone.zone_id
      name    = var.site_domain
      type    = "A"

      alias {
        name    = replace(aws_cloudfront_distribution.s3_blog_distribution.domain_name, "/[.]$/", "")
        zone_id = aws_cloudfront_distribution.s3_blog_distribution.hosted_zone_id

        # Route 53 does not support target health evaluation for CloudFront alias targets.
        evaluate_target_health = false
      }

      depends_on = [aws_cloudfront_distribution.s3_blog_distribution]
    }
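
The distribution sets is_ipv6_enabled = true, but an A record only answers IPv4 lookups. If IPv6 clients should reach the site through the apex domain, a matching AAAA alias record is needed as well. A sketch, in which the resource name apex_ipv6 is a hypothetical choice:

```hcl
resource "aws_route53_record" "apex_ipv6" {
  zone_id = aws_route53_zone.zone.zone_id
  name    = var.site_domain
  type    = "AAAA"

  alias {
    name    = aws_cloudfront_distribution.s3_blog_distribution.domain_name
    zone_id = aws_cloudfront_distribution.s3_blog_distribution.hosted_zone_id

    # Route 53 does not support target health evaluation for CloudFront alias targets.
    evaluate_target_health = false
  }
}
```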

Lambda

We decided earlier we wanted to use Lambda@Edge to add security headers to our site responses. We’ve already referenced this function in the CloudFront resource definition so next we’ll define the function.

    resource "aws_lambda_function" "lambda_edge_response" {
      filename         = data.archive_file.package_lambda_response.output_path
      function_name    = "lambda_edge_add_security_headers"
      role             = aws_iam_role.lambda_edge_exec.arn
      handler          = "add_headers.handler"
      publish          = true
      source_code_hash = data.archive_file.package_lambda_response.output_base64sha256
      runtime          = "nodejs10.x"
    }

You’ll see we’ve referenced the location of a file we’ll be using to create the function. The file is a zip archive that will be created by the following data source.

    data "archive_file" "package_lambda_response" {
      type        = "zip"
      source_file = "lambda/add_headers.js"
      output_path = "lambda/add_header.zip"
    }

The above data source packages the function code located at lambda/add_headers.js. The code is as follows:

    'use strict';

    // Lambda@Edge origin-response handler: adds security headers to every response.
    exports.handler = async (event) => {
      console.log('Event: ', JSON.stringify(event, null, 2));

      const response = event.Records[0].cf.response;

      response.headers['strict-transport-security'] = [{ value: 'max-age=31536000; includeSubDomains' }];
      // The report-uri value is a bare URL; CSP only quotes keywords such as 'self'.
      response.headers['content-security-policy'] = [{ value: "script-src 'self'; style-src 'self' https://fonts.googleapis.com/ 'unsafe-inline'; img-src 'self'; object-src 'none'; report-uri https://martintyrer.report-uri.com/r/d/csp/wizard" }];
      response.headers['x-xss-protection'] = [{ value: '1; mode=block' }];
      response.headers['x-content-type-options'] = [{ value: 'nosniff' }];
      response.headers['x-frame-options'] = [{ value: 'DENY' }];
      response.headers['referrer-policy'] = [{ value: 'no-referrer' }];
      response.headers['feature-policy'] = [{ value: "geolocation 'none'; midi 'none'; notifications 'none'; push 'none'; sync-xhr 'none'; microphone 'none'; camera 'none'; magnetometer 'none'; gyroscope 'none'; speaker 'self'; vibrate 'none'; fullscreen 'self'; payment 'none';" }];
      response.headers['expect-ct'] = [{ value: 'max-age=86400, enforce, report-uri="https://martintyrer.report-uri.com/r/d/ct/enforce"' }];

      return response;
    };

Finally, let’s sort out the permissions and create the role that is needed for our function.

    resource "aws_iam_role" "lambda_edge_exec" {
      assume_role_policy = <<-EOF
        {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Action": "sts:AssumeRole",
              "Principal": {
                "Service": ["lambda.amazonaws.com", "edgelambda.amazonaws.com"]
              },
              "Effect": "Allow"
            }
          ]
        }
      EOF
    }
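
The role above only defines which services may assume it. The function calls console.log, and for that output to reach CloudWatch Logs the role also needs logging permissions. One way to grant them, sketched here as an assumption rather than the original setup, is to attach the AWS managed basic execution policy; the resource name lambda_edge_logs is hypothetical.

```hcl
resource "aws_iam_role_policy_attachment" "lambda_edge_logs" {
  role       = aws_iam_role.lambda_edge_exec.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}
```

Note that Lambda@Edge writes its logs to CloudWatch in the region nearest to the edge location that ran the function, so they will not necessarily appear in us-east-1.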

Terraform apply

We’ve now run terraform apply and have the infrastructure in place to host our site. In the next post we’ll be covering the creation of the blog.
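
After the apply, a few values are handy to have on hand: the name servers of the new hosted zone (which need to be set at the registrar if the domain is registered elsewhere) and the details of the distribution. A sketch of outputs that would expose them; the output names are hypothetical.

```hcl
output "name_servers" {
  description = "Name servers assigned to the hosted zone"
  value       = aws_route53_zone.zone.name_servers
}

output "cloudfront_domain_name" {
  description = "Domain name of the CloudFront distribution"
  value       = aws_cloudfront_distribution.s3_blog_distribution.domain_name
}

output "cloudfront_distribution_id" {
  description = "Distribution ID, needed when invalidating cached content"
  value       = aws_cloudfront_distribution.s3_blog_distribution.id
}
```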
