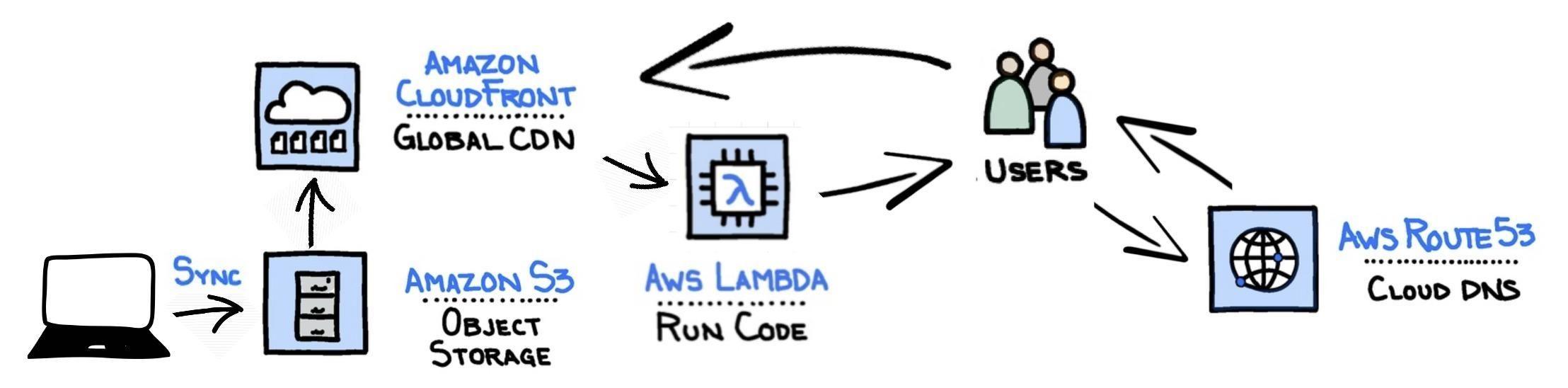

Now that we’ve created the platform detailed here, we need to address a vital piece of the solution: getting our blog content into our site's S3 bucket. We’ll be adding to our existing design with the following:

I initially experimented with creating a local instance of the blog using Ghost in a Docker container and using HTTrack to create a static copy. I’d then sync this to the S3 bucket. This presented a few quirks that needed addressing.

Whilst I was resolving one of these issues I discovered the existence of static site generators such as Jekyll and Hugo. After doing a bit of research, I decided to switch to using Jekyll as it was a better fit for our static platform.

The setup guide is a good place to start if you are considering creating a site in a similar way. I strayed from this guidance by avoiding a local installation and opting for the Docker approach detailed here.

After experimenting with creating a site from scratch I decided to grab a template from here as a starting point. Once downloaded, I ran the following from inside the folder:

docker run --rm --volume="$PWD:/srv/jekyll" --volume="$PWD/vendor/bundle:/usr/local/bundle" -p 4000:4000 -it jekyll/jekyll:latest jekyll serve

Visiting 127.0.0.1:4000 confirmed we had a Jekyll site available. All that was left was to work through the README document included.

Fast forward [some time], and we’ve configured our site, modified the template, and created two blog posts (this post plus the post on building the platform). Now all that remains is to get the site into production.

We run a final build:

docker run --rm --volume="$PWD:/srv/jekyll" --volume="$PWD/vendor/bundle:/usr/local/bundle" -p 4000:4000 -it jekyll/jekyll:latest jekyll build

Then upload the generated files (in the “_site” directory) to our S3 bucket:

aws s3 sync _site/ s3://s3.martintyrer.com --delete

Accessing martintyrer.com, we see the blog. But we can’t navigate properly… why? Local testing had not exhibited these symptoms.

The problem stems from the way Jekyll generates content compared with the way CloudFront behaves when serving a static site from an S3 bucket. With CloudFront, we can’t set a default file for a subdirectory. Therefore, if we have a URL “https://martintyrer.com/tag/terraform/”, we can’t tell CloudFront to serve index.html from within this directory (https://martintyrer.com/tag/terraform/index.html being the resource location).

Similarly, posts are linked within the site using their “pretty” form, e.g. “https://martintyrer.com/Building-the-blog-platform”, when the actual content exists at “https://martintyrer.com/Building-the-blog-platform.html”.
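To make the mismatch concrete, here is a minimal sketch that models the bucket as a set of object keys. It assumes CloudFront fetches from the bucket as a plain object store, so a request only succeeds when its path matches a key exactly. The `bucketKeys` listing and the `lookup` helper are illustrative names, not part of the real setup.

```javascript
'use strict';

// Hypothetical listing of object keys, mirroring what Jekyll writes to _site/.
const bucketKeys = new Set([
  'index.html',
  'Building-the-blog-platform.html',
  'tag/terraform/index.html',
]);

// Assumption: the origin looks up the object whose key is the request path
// minus the leading slash, with no per-directory index fallback.
const lookup = uri => bucketKeys.has(uri.replace(/^\//, ''));

const requests = [
  '/tag/terraform/',                  // link as generated by the site
  '/tag/terraform/index.html',        // where the content actually lives
  '/Building-the-blog-platform',      // "pretty" post link
  '/Building-the-blog-platform.html', // actual object
];

for (const uri of requests) {
  console.log(`${uri} -> ${lookup(uri) ? 'found' : 'missing'}`);
}
```

Under these assumptions the two links the site actually generates are the ones that come back missing, which is why navigation breaks in production but not under `jekyll serve`.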

There are many potential solutions to this, but I decided to opt for creating a second Lambda@Edge function to rewrite requests before passing them to our origin (S3).

resource "aws_lambda_function" "lambda_edge_request" {
  filename         = data.archive_file.package_lambda_request.output_path
  function_name    = "lambda_edge_modify_requests"
  role             = aws_iam_role.lambda_edge_exec.arn
  handler          = "rewrite_requests.handler"
  publish          = true
  source_code_hash = data.archive_file.package_lambda_request.output_base64sha256
  runtime          = "nodejs10.x"
}

You’ll see we’ve referenced the location of a file we’ll be using to create the function. The file is an archive file that will be created by the following resource.

data "archive_file" "package_lambda_request" {
  type        = "zip"
  source_file = "lambda/rewrite_requests.js"
  output_path = "lambda/rewrite_requests.zip"
}

The source file above contains the following code (which was taken from this blog post). The function inspects each request and rewrites its URI to match the content as it’s named in our S3 bucket.

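'use strict';

// True when the last path segment looks like a file name (it contains a dot).
const pointsToFile = uri => /\/[^/]+\.[^/]+$/.test(uri);
const hasTrailingSlash = uri => uri.endsWith('/');

exports.handler = (event, context, callback) => {
  const request = event.Records[0].cf.request;
  const olduri = request.uri;

  // Requests that already name a file pass through untouched.
  if (pointsToFile(olduri)) {
    callback(null, request);
    return;
  }

  if (!hasTrailingSlash(olduri)) {
    // Pretty post URL: /Building-the-blog-platform -> /Building-the-blog-platform.html
    request.uri = olduri + ".html";
  } else {
    // Directory URL: /tag/terraform/ -> /tag/terraform/index.html
    request.uri = olduri + "index.html";
  }

  return callback(null, request);
};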
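Before deploying, the rewrite rules can be checked locally with Node. The sketch below repeats the same rules so it runs on its own, then feeds a few URIs through the handler. The `rewrite` helper and the `/assets/css/main.css` path are hypothetical, and the event contains only the fields this handler reads; a real CloudFront event carries considerably more.

```javascript
'use strict';

// Same rewrite rules as rewrite_requests.js, repeated so this file is self-contained.
const pointsToFile = uri => /\/[^/]+\.[^/]+$/.test(uri);
const hasTrailingSlash = uri => uri.endsWith('/');

const handler = (event, context, callback) => {
  const request = event.Records[0].cf.request;
  if (pointsToFile(request.uri)) {
    return callback(null, request);
  }
  request.uri += hasTrailingSlash(request.uri) ? 'index.html' : '.html';
  return callback(null, request);
};

// Hypothetical helper: wraps a URI in a minimal event and returns the
// rewritten URI. The handler calls back synchronously, so `result` is set
// by the time handler() returns.
const rewrite = uri => {
  let result;
  const event = { Records: [{ cf: { request: { uri, querystring: '' } } }] };
  handler(event, {}, (err, request) => { result = request.uri; });
  return result;
};

const samples = [
  '/',
  '/tag/terraform/',
  '/Building-the-blog-platform',
  '/assets/css/main.css', // hypothetical asset path
];

for (const uri of samples) {
  console.log(`${uri} -> ${rewrite(uri)}`);
}
```

One thing a check like this makes visible: any path whose final segment contains a dot, such as a post slug with a version number in it, matches `pointsToFile` and is passed through without the `.html` suffix.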

After another “terraform apply” and a short wait for the CloudFront distribution to deploy the new function, we can now browse the site as expected!

In the next post, I’ll cover how we can automatically build our site using CodeBuild whenever we push new content to GitHub.
